6 Analysing Spatial Patterns I: Geometric Operations and Spatial Queries

This week, we will be looking at the use of geometric operations and spatial queries within spatial data processing and analysis. Geometric operations and spatial queries are not really a theoretical topic per se in spatial “pattern” analysis but rather essential building blocks to overall spatial data processing and analysis. This is because - and the clue is in the name - they conduct incredibly useful operations and queries on or using the geometry of our data sets, from calculating the area covered by an individual polygon in an areal unit data set, or subsetting the spatial extent of a data set based on another, to running buffer and point-in-polygon calculations.

6.1 Lecture recording

You can access the Lecturecast recording here: [Link]. The slides for this week’s lecture can be downloaded here: [Link].

6.2 Reading list

Essential readings

- Lovelace, R., Nowosad, J. and Muenchow, J. 2021. Geocomputation with R, Chapter 4: Spatial data operations. [Link]

- Lovelace, R., Nowosad, J. and Muenchow, J. 2021. Geocomputation with R, Chapter 5: Geometry operations. [Link]

- Lovelace, R., Nowosad, J. and Muenchow, J. 2021. Geocomputation with R, Chapter 6: Reprojecting geographic data. [Link]

Suggested readings

- Houlden, V. et al. 2019. A spatial analysis of proximate greenspace and mental wellbeing in London. Applied Geography 109: 102036. [Link]

- Malleson, N. and Andresen, M. 2016. Exploring the impact of ambient population measures on London crime hotspots. Journal of Criminal Justice 46: 52-63. [Link]

- Oliver, L., Schuurman, N. and Wall, A. 2007. Comparing circular and network buffers to examine the influence of land use on walking for leisure and errands. International Journal of Health Geographics 6: 41. [Link]

6.3 Bike theft in London

This week, we will be investigating bike theft in London in 2019 - and look to confirm a very simple hypothesis: that bike theft primarily occurs near tube and train stations. We will be investigating its distribution across London using the point data provided within our crime data set. We will then compare this distribution to the location of train and tube stations using specific geometric operations and spatial queries that can compare the geometry of two (or more) data sets.

We will also learn how to download data from OpenStreetMap as well as use an interactive version of tmap to explore the distribution of the locations of individual bike theft against the locations of these stations.

6.3.1 Housekeeping

Let’s get ourselves ready to start our practical by first downloading the relevant data and loading this within our script.

Open a new script within your GEOG0030 project and save this script as wk6-bike-theft-analysis.r. At the top of your script, add the following metadata (substitute accordingly):

# Analysing bike theft and its relation to stations using geometric analysis

# Date: January 2022

# Author: Justin Before continuing, please install the following libraries through your console: osmdata, RColorBrewer, janitor

Within your script, add the following libraries for loading:

# libraries

library(tidyverse)

library(sf)

library(tmap)

library(janitor)

library(RColorBrewer)

library(osmdata)6.3.2 Loading data

This week, we will start off using three data sets:

- London Ward boundaries for 2018

- Crime in London for 2019 from data.police.uk

- Train and tube Stations from Transport for London

File download

| File | Type | Link |

|---|---|---|

| Crime in London 2019 | csv |

Download |

| Train and tube stations in London | kml |

Download |

Download the files above. Store your crime_all_2019_london.csv in your data/raw/crime folder. Move your tfl_stations.kml download to your raw data folder and create a new transport folder to contain it. The London Ward boundaries for 2019 should still be in your data/raw/boundaries folder.

Let’s first load our London Ward shapefile:

# read in our 2018 London Ward boundaries

london_ward_shp <- read_sf("data/raw/boundaries/2018/London_Ward.shp")Check the CRS of our london_ward_shp spatial dataframe:

# check the CRS for the 2018 London Ward boundaries

st_crs(london_ward_shp)## Coordinate Reference System:

## User input: OSGB 1936 / British National Grid

## wkt:

## PROJCRS["OSGB 1936 / British National Grid",

## BASEGEOGCRS["OSGB 1936",

## DATUM["OSGB 1936",

## ELLIPSOID["Airy 1830",6377563.396,299.3249646,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4277]],

## CONVERSION["British National Grid",

## METHOD["Transverse Mercator",

## ID["EPSG",9807]],

## PARAMETER["Latitude of natural origin",49,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8801]],

## PARAMETER["Longitude of natural origin",-2,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8802]],

## PARAMETER["Scale factor at natural origin",0.9996012717,

## SCALEUNIT["unity",1],

## ID["EPSG",8805]],

## PARAMETER["False easting",400000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",-100000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["(E)",east,

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["(N)",north,

## ORDER[2],

## LENGTHUNIT["metre",1]],

## USAGE[

## SCOPE["Engineering survey, topographic mapping."],

## AREA["United Kingdom (UK) - offshore to boundary of UKCS within 49°45'N to 61°N and 9°W to 2°E; onshore Great Britain (England, Wales and Scotland). Isle of Man onshore."],

## BBOX[49.75,-9,61.01,2.01]],

## ID["EPSG",27700]]Of course it should be of no surprise that our london_ward_shp spatial dataframe is in BNG / ESPG: 27700, however, it is always good to check. Let’s go ahead and read in our tfl_stations data set:

# read in our London stations data set

london_stations <- read_sf("data/raw/transport/tfl_stations.kml")This data set is provided as a kml file, which stands for Keyhole Markup Language (KML). KML was originally created as a file format used to display geographic data in Google Earth. So we definitely need to check what CRS this data set is in and decide whether we will need to do some reprojecting.

# check the CRS for the London stations

st_crs(london_stations)## Coordinate Reference System:

## User input: WGS 84

## wkt:

## GEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## CS[ellipsoidal,2],

## AXIS["geodetic latitude (Lat)",north,

## ORDER[1],

## ANGLEUNIT["degree",0.0174532925199433]],

## AXIS["geodetic longitude (Lon)",east,

## ORDER[2],

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4326]]The result informs us that we are going to need to reproject our data in order to use this dataframe with our london_ward_shp spatial dataframe. Luckily in R and the sf library, this reprojection is a relatively straight-forward transformation, requiring only one function: st_transform(). The function is very simple to use - you only need to provide the function with the data set and the code for the new CRS you wish to use with the data:

# reproject our data from WGS84 to BNG

london_stations <- st_transform(london_stations, 27700)We can double-check whether our new variable is in the correct CRS by using the st_crs() command:

# check the CRS for the London stations

st_crs(london_stations)## Coordinate Reference System:

## User input: EPSG:27700

## wkt:

## PROJCRS["OSGB 1936 / British National Grid",

## BASEGEOGCRS["OSGB 1936",

## DATUM["OSGB 1936",

## ELLIPSOID["Airy 1830",6377563.396,299.3249646,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4277]],

## CONVERSION["British National Grid",

## METHOD["Transverse Mercator",

## ID["EPSG",9807]],

## PARAMETER["Latitude of natural origin",49,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8801]],

## PARAMETER["Longitude of natural origin",-2,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8802]],

## PARAMETER["Scale factor at natural origin",0.9996012717,

## SCALEUNIT["unity",1],

## ID["EPSG",8805]],

## PARAMETER["False easting",400000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",-100000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["(E)",east,

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["(N)",north,

## ORDER[2],

## LENGTHUNIT["metre",1]],

## USAGE[

## SCOPE["Engineering survey, topographic mapping."],

## AREA["United Kingdom (UK) - offshore to boundary of UKCS within 49°45'N to 61°N and 9°W to 2°E; onshore Great Britain (England, Wales and Scotland). Isle of Man onshore."],

## BBOX[49.75,-9,61.01,2.01]],

## ID["EPSG",27700]]You should see that our london_stations spatial dataframe is now in BNG / EPSG: 27700. We are now ready to load our final data set - our csv that contains our crime from 2019.

From this csv, we want to do three things:

- Extract only those crimes that are bicycle thefts, i.e.

crime_type == "bicycle theft". - Convert our

csvinto a spatial dataframe that shows the locations of our crimes, determined by the latitude and longitudes provided, as points. - Transform our data from WGS84 / 4326 to BNG / 27700.

Since we are getting pretty used to looking at code and cleaning data we should be able to chain these operations using the %>% operator:

# read in our crime data csv from our raw data folder

bike_theft_2019 <- read_csv("data/raw/crime/crime_all_2019_london.csv") %>%

# clean names with janitor

clean_names() %>%

# filter according to crime type and ensure we have no NAs in our data set

filter(crime_type == "Bicycle theft" & !is.na(longitude) & !is.na(latitude)) %>%

# select just the longitude and latitude columns

dplyr::select(longitude, latitude) %>%

# transform into a point spatial dataframe

# note providing the columns as the coordinates to use

# plus the CRS, which as our columns are long/lat is WGS84/4236

st_as_sf(coords = c("longitude", "latitude"), crs = 4236) %>%

# convert into BNG

st_transform(27700)We now have our three data sets loaded, it is time for a little data checking. We can see just from our Environment window that in total, we have 302 stations and 18,744 crimes to look at in our analysis. We can double-check the (Attribute) tables of our newly created spatial dataframes to see what data we have to work with.

You can either do this manually by clicking on the variable, or using commands such as head(), summary() and names() to get an understanding of our dataframe structures and the field names present - you can choose your approach, but make sure to look at your data.

As you should remember from the code above, for our bicycle theft data, we actually only have our geometry column because this is all that we extracted from our crime csv. For our london_stations spatial dataframe, we have a little more information, including the name of the station and its address - as well as its geometry.

Now, let’s nap all three layers of data onto a single map using tmap:

# plot our London Wards first

tm_shape(london_ward_shp) + tm_fill() +

# then add bike crime as blue

tm_shape(bike_theft_2019) + tm_dots(col = "blue") +

# then add our stations as red

tm_shape(london_stations) + tm_dots(col = "red") +

# then add a north arrow

tm_compass(type = "arrow", position = c("right", "bottom")) +

# then add a scale bar

tm_scale_bar(breaks = c(0, 5, 10, 15, 20), position = c("left", "bottom"))

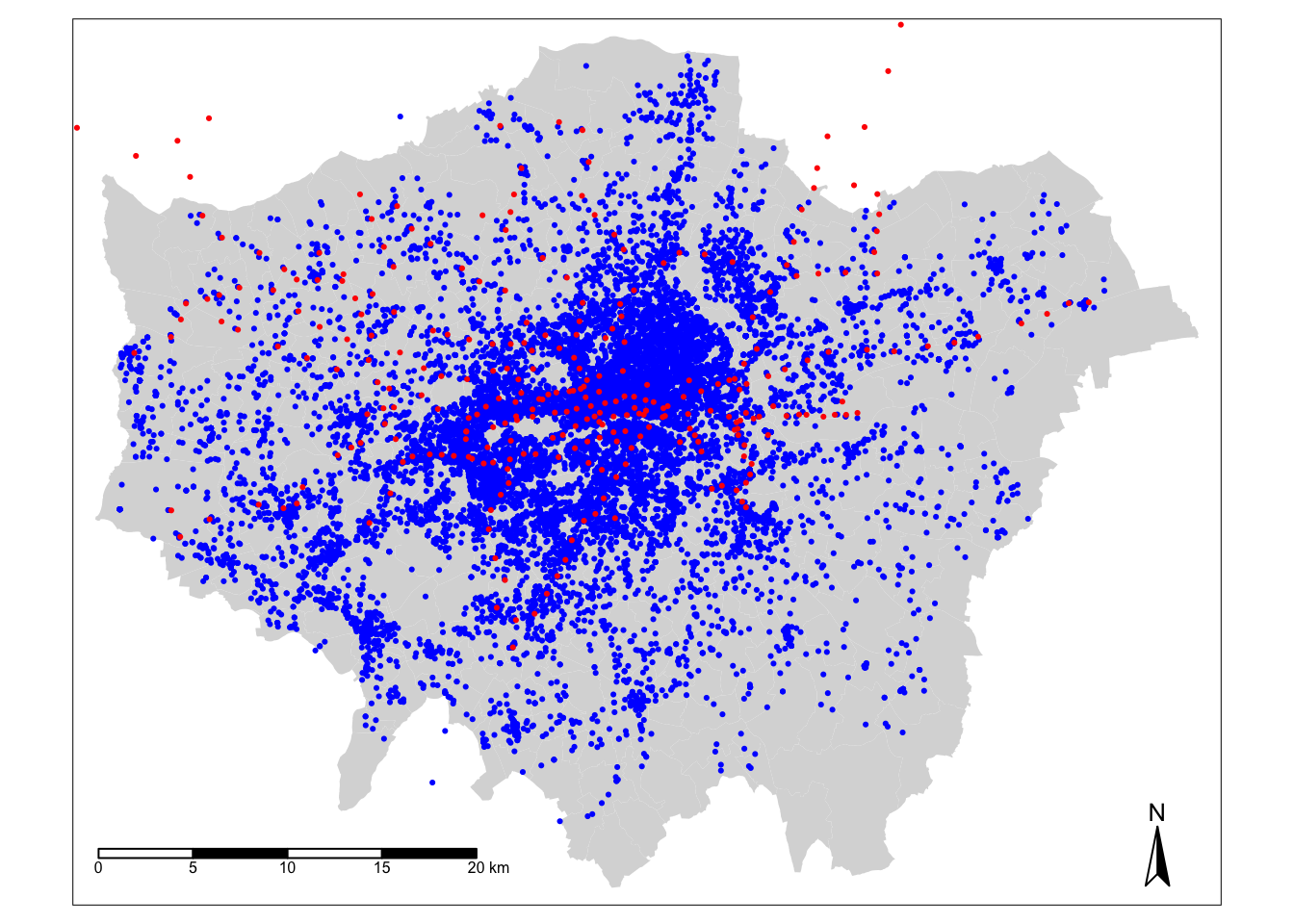

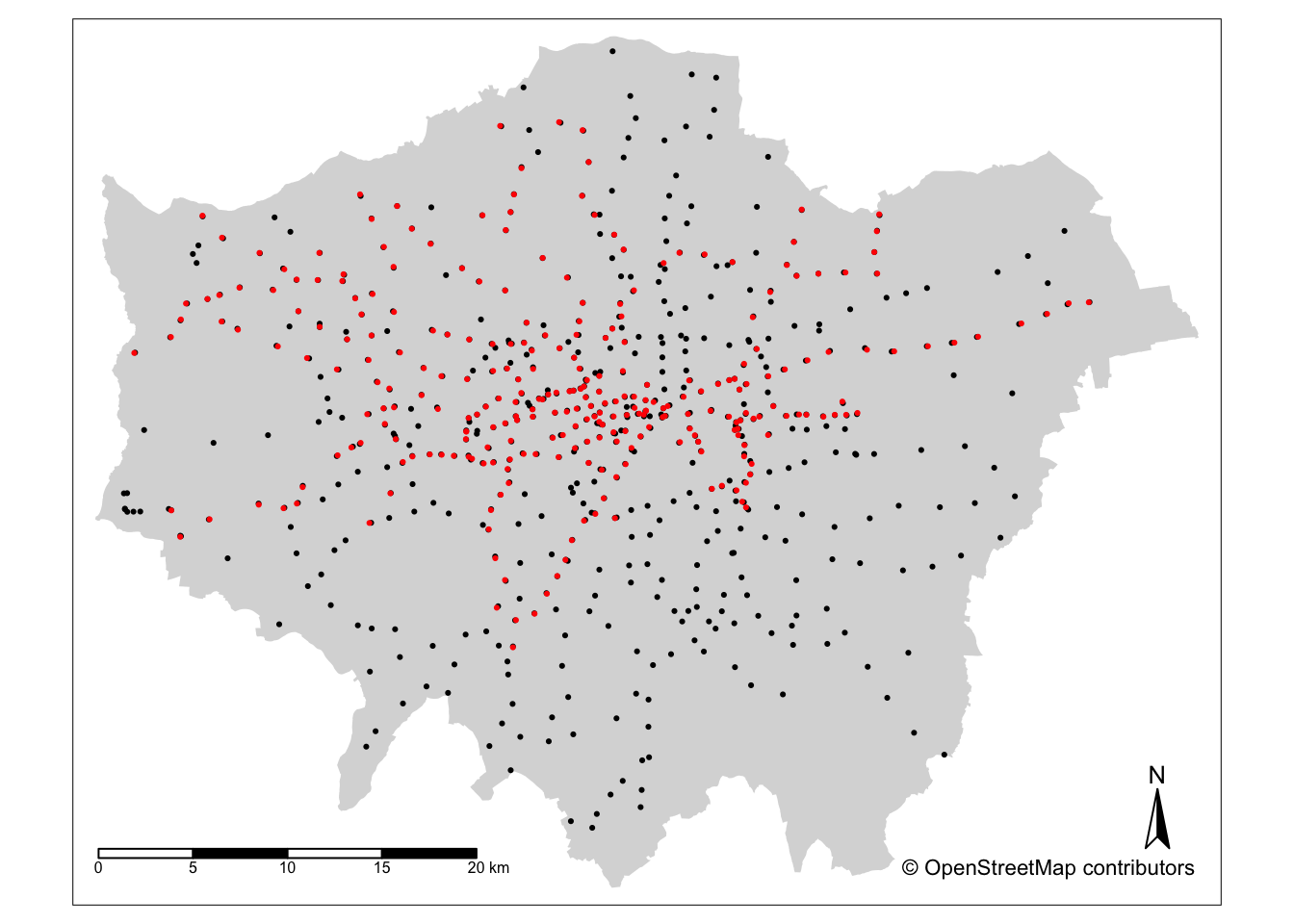

Let’s think about the distribution of our data - we can already see that our bike theft is obviously highly concentrated in the centre of London although we can certainly see some clusters in the Greater London areas. Let’s go ahead and temporally remove the bike theft data from our map for now to see where our tube and train stations are located.

To remove the bike data, simply put a comment sign in front of that piece of code and re-run the code:

# plot our London Wards first

tm_shape(london_ward_shp) + tm_fill() +

# then add bike crime as blue

# tm_shape(bike_theft_2019) + tm_dots(col="blue") +

# then add our stations as red

tm_shape(london_stations) + tm_dots(col = "red") +

# then add a north arrow

tm_compass(type = "arrow", position = c("right", "bottom")) +

# then add a scale bar

tm_scale_bar(breaks = c(0, 5, 10, 15, 20), position = c("left", "bottom"))

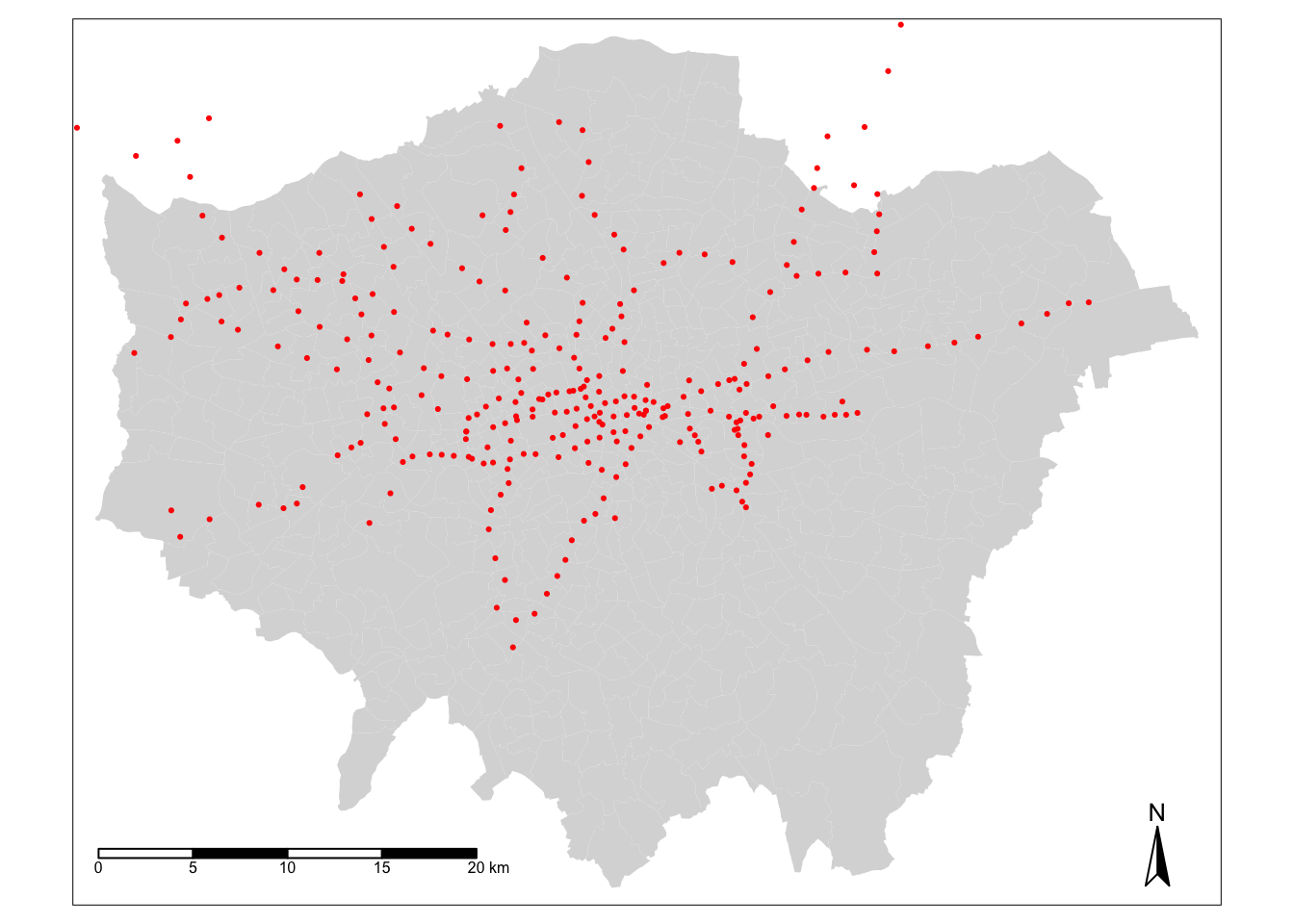

We can see our train and tube stations are only present in primarily the north of London - and not really present in the south. This is not quite right and as it turns out the data set only contains those train stations used by Transport for London within the tube network rather than all the stations in London. We will need to fix this before conducting our full analysis. But this isn’t the only problem with our data set - we can see that both our bike_theft spatial dataframe and our london_stations spatial dataframe extend beyond our London boundary.

6.3.3 Data preparation

When we want to reduce a data set to the spatial extent of another, there are two different approaches to conducting this in spatial analysis - a subset or a clip - which each deal with the geometry of the resulting data set in slightly different ways.

A clip-type operation works a bit like a cookie-cutter - it will take the geometry of “dough” layer (i.e. the layer you want to clip), places a “cookie-cutter” layer on top (i.e. the layer you want to clip by) and then returns only the dough contained within the cookie-cutter. This will mean that the geometry of our resulting “dough” layer will be modified, if it contains observation features that extend further than the “cookie-cutter” extent - it will literally “cut” the geometry of our data.

A subset-type operation is what is known in GIScience-speak as a select by location query - in this case, our subset will return the full geometry of each observation feature that intersects with our “clip when our”’“cookie-cutter” layer. Any geometry that does not intersect with our clip layer will be removed from the geometry of our resulting layer.

Luckily for us, as we are using point data, we can (theoretically) use either approach because it is not possible to split the geometry of a single point feature. However, if a point feature does fall on the same geometry as our “clip” layer, it will be excluded from our data set. When it comes to polygon and line data, not understanding the differences between the two approaches can lead you into difficulties with your data processing as there will be differences in the feature geometry between the clipped layer and the subset layer.

Each approach is implemented differently in R - and can actually be used together to speed up the efficiency in your code. To subset our data, we only need to use the base R library to selection using [] brackets:

# subset our bike_theft_2019 spatial dataframe by the london_ward_shp spatial

# dataframe

bike_theft_2019_subset <- bike_theft_2019[london_ward_shp, ]Conversely, if we want to clip our data, we need to use the st_intersection() function from the sf library.

# clip our bike_theft_2019 spatial dataframe by the london_ward_shp spatial

# dataframe

bike_theft_2019 <- bike_theft_2019 %>%

st_intersection(london_ward_shp)## Warning: attribute variables are assumed to be spatially constant throughout all

## geometriesNote

Which approach you use with future data is always dependent on the data set you want to use - and the output you need. For example, is keeping the geometry of your observation features in your data set important? Out of the two, the subset approach is the fastest to use as R is simply comparing the geometries rather than also editing the geometries.

Before we go ahead and sort out our london_stations spatial dataframe, we are going to look at how we can dissolve our london_ward_shp spatial dataframe into a single feature. Reducing a spatial dataframe to a single observation is often required when using R and sf‘s geometric operations to complete geometric comparisons. Sometimes, also, we simply want to map an outline of an area, such as London, rather than add in the additional spatial complexities of our wards. To achieve just a single ’observation’ that represents the outline geometry of our data set, we use the geometric operation, st_union().

Note

You can also use the st_union() function to union two data sets into one - this can be used to merge data together that are of the same spatial type.

Let’s go ahead and see if we can use this to create our London outline:

# use st_union to create a single outline of London from our london_ward_shp

# spatial dataframe

london_outline <- london_ward_shp %>%

st_union()You should see that our london_outline spatial data frame only has one observation. You can now go ahead and plot() your london_outline spatial dataframe from your console and see what it looks like:

Back to our train and tube stations. We have seen that our current london_stations spatial dataframe really does not provide the coverage of train stations in London that we expected. To add in our missing data, we will be using OpenStreetMap. If you have never come across OpenStreetMap (OSM) before, it is a free editable map of the world.

Note

OpenStreetMap’s spatial coverage is still unequal across the world - plus, as you will find if you use the data, the accuracy and quality of the data can often be quite questionable or simply missing attribute details that we would like to have, e.g. types of roads and their speed limits, to complete specific types of spatial analysis. As a result, do not expect OSM to contain every piece of spatial data that you would want.

Whilst there are various approaches to downloading data from OpenStreetMap, we will use the osmdata library to directly extract our required OpenStreetMap (OSM) data into a variable. The osmdata library grants access within R to the Overpass API that allows us to run queries on OSM data and then import the data as either sf or sp objects. These queries are at the heart of these data downloads.

To use the library (and API), we need to know how to write and run a query, which requires identifying the key and value that we need within our query to select the correct data. Essentially every map element (whether a point, line or polygon) in OSM is “tagged” with different attribute data. In our case, we are looking for train stations, which fall under the key, Public Transport, with a value of station as outlined in their wiki. These keys and values are used in our queries to extract only map elements of that feature type - to find out how a feature is “tagged” in OSM is simply a case of reading through the OSM documentation and becoming familiar with their keys and values.

In addition to this key-value pair, we also need to obtain the bounding box of where we want our data to be extracted from, i.e. London, to prevent OSM searching the whole map of the world for our feature (although the API query does have in-built time and spatial coverage limits to stop this from happening).

Let’s try to extract elements from OSM that are tagged as public_transport = station from OSM into an osmdata_sf() object:

# extract the coordinates from our London outline using the st_bbox() function

# note we also temporally reproject the london_outline spatial dataframe before obtaining the bbox

# we need our bbox coordinates in WGS84 (not BNG), hence reprojection

p_bbox <- st_bbox(st_transform(london_outline, 4326))

# pass our bounding box coordinates into the OverPassQuery (opq) function

london_stations_osm <- opq(bbox = p_bbox) %>%

# pipe this into the add_osm_feature data query function to extract our stations

add_osm_feature(key = "public_transport", value = "station") %>%

# pipe this into our osmdata_sf object

osmdata_sf()Note

In some cases this code may (temporarily) not work. If that is the case you can download the london_stations_osm object here: [Download]. After downloading, you can copy the file to your working directory and load the object using the load() function.

Note

When we download OSM data - and extract it as above - our query will return all elements tagged as our key-value pair into our osmdata_sf() OSM data object. This means all elements - any points, lines and polygons - associated with our tag will be returned. Obviously we would think with our public_transport = station tag, we would only return point data representing our train and tube stations in London. But if we use the summary() function on our london_stations_osm OSM data object, we can see that not only is a lot of other data stored in our OSM data object (including the bounding box we used within our query, plus metadata about our query), but our query has returned both points and polygons - currently stored within this OSM data object as individual spatial data frames.

To extract only the points of our tube and train stations from our london_stations_osm OSM data object, we simply need to extract this from the dataframe and store this under a separate variable. But, whenever you are dealing with OSM data, just remember that your query can return multiple different types of map elements (and their respective geometries), so always be clear in knowing which type of spatial data you will need and remember to extract this from your OSM data object.

Extract train station points from our OSM data object and process/clean ready for analysis:

# extract only the points data from the osmdata object

london_stations_osm <- london_stations_osm$osm_points %>%

# add projection to the point spatial dataframe

st_set_crs(4326) %>%

# reproject our data set to BNG

st_transform(27700) %>%

# clip to the London outline shapefile

st_intersection(london_outline) %>%

# select only attributes that seem relevant

dplyr::select(c("osm_id", "name", "network", "operator", "public_transport", "railway"))## Warning: attribute variables are assumed to be spatially constant throughout all

## geometries# inspect

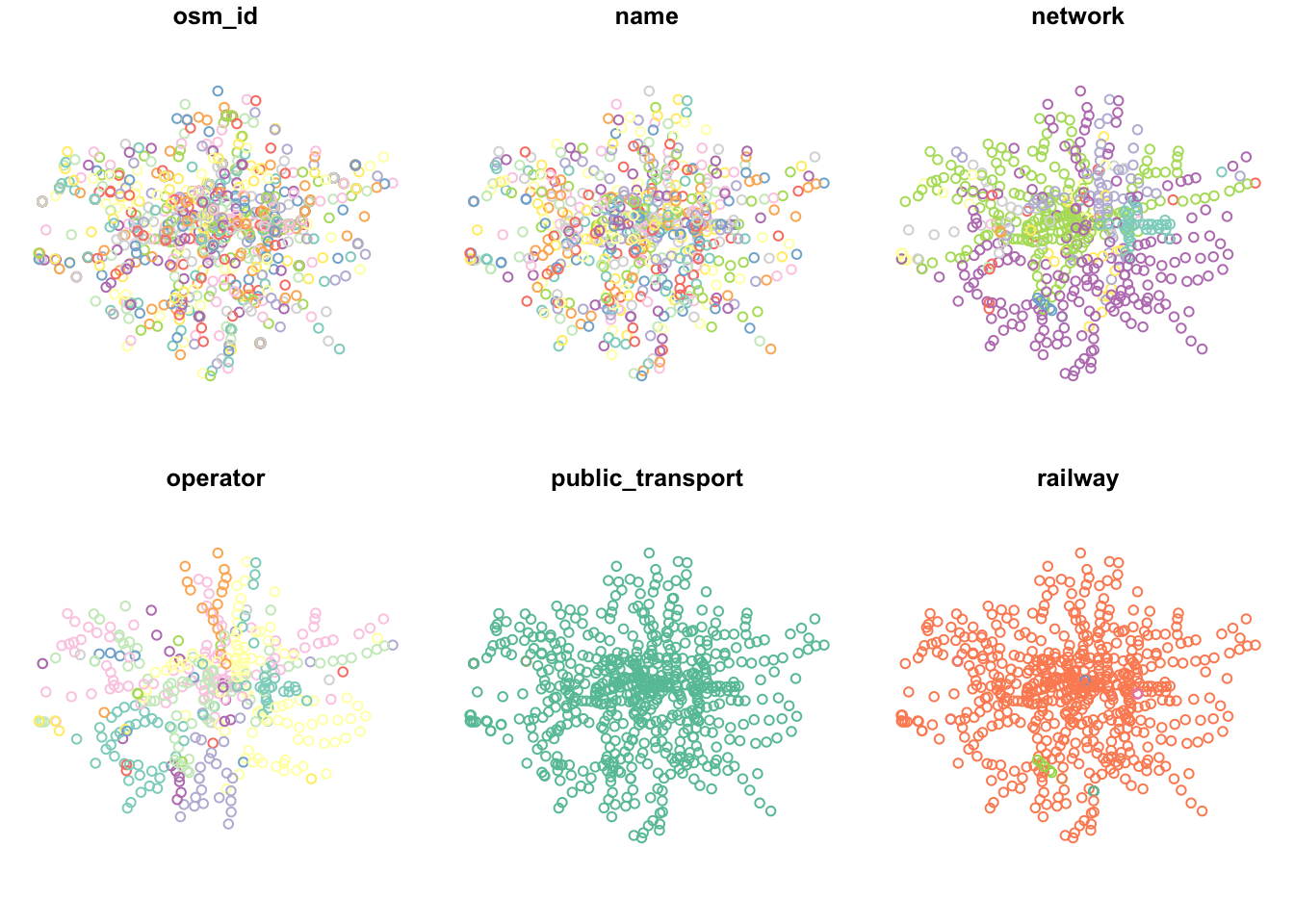

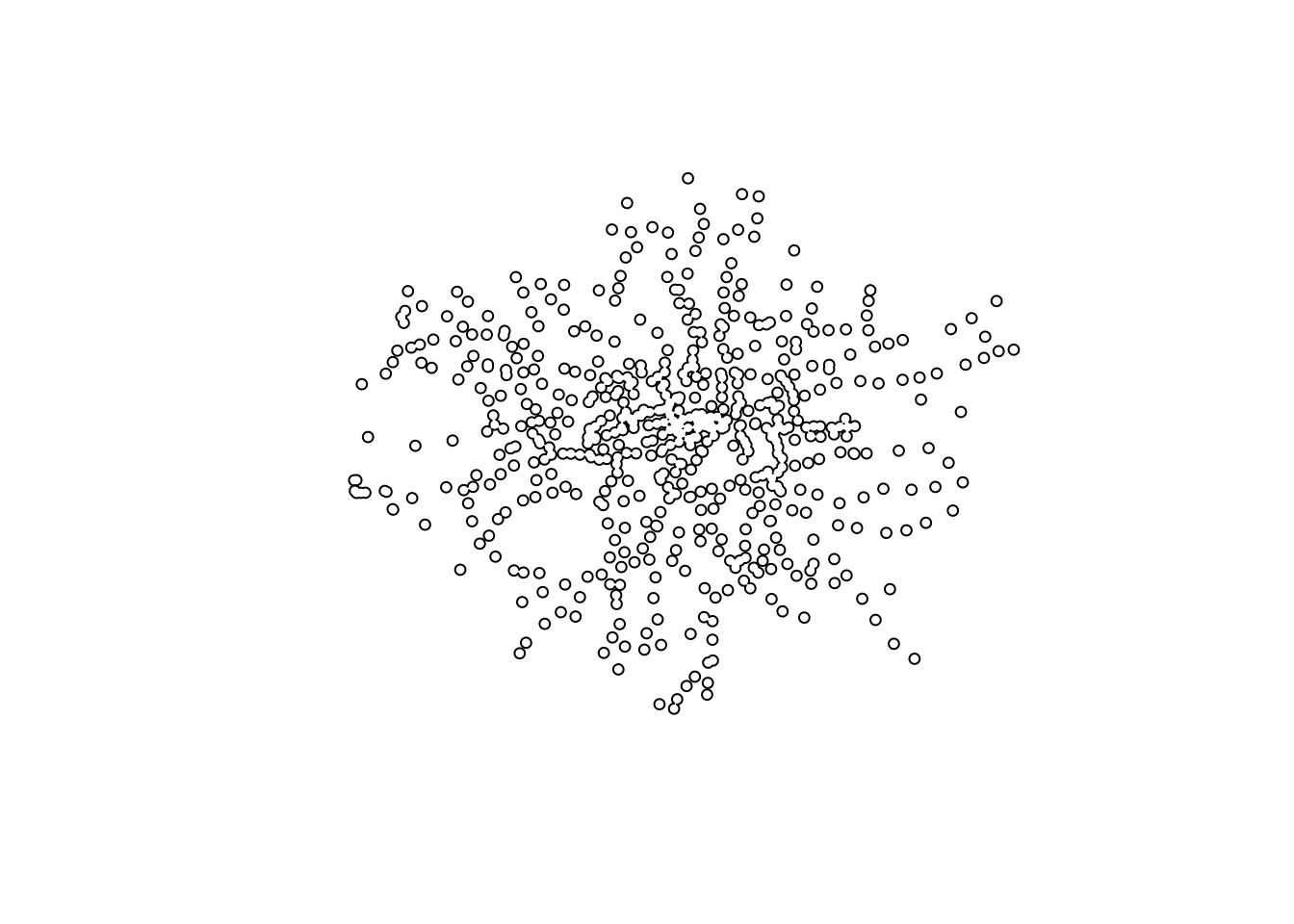

plot(london_stations_osm)

With the accuracy of OSM a little questionable, we want to complete some data validation tasks to check its quality and to confirm that it at least contains the data we see in our authoritative london_stations spatial dataframe. The total number of data points also seems rather high. In fact, a quick search online can tell us that there are 272 tube stations in the London network as well as 335 train stations in Greater London.

As we can see in our plot above, not all of our stations appear to be of the same value in our railway field. If we check the field using our count() function, you will see that there are some different values and NAs in our data set:

# inspect values

count(london_stations_osm, railway)## Simple feature collection with 6 features and 2 fields

## Geometry type: MULTIPOINT

## Dimension: XY

## Bounding box: xmin: 505078.2 ymin: 159027.2 xmax: 556185.7 ymax: 200138.6

## Projected CRS: OSGB 1936 / British National Grid

## railway n geometry

## 1 entrance 2 MULTIPOINT ((532814.8 16572...

## 2 station 593 MULTIPOINT ((505078.2 17673...

## 3 stop 2 MULTIPOINT ((513225.1 18452...

## 4 subway_entrance 7 MULTIPOINT ((512210.7 17613...

## 5 tram_stop 6 MULTIPOINT ((524730.4 17025...

## 6 <NA> 1034 MULTIPOINT ((505596.3 18419...As we can see, not everything in our london_stations_osm spatial dataframe is a ‘station’ as recorded by OSM and we have a high number of NAs which are unlikely to actually represent stations in London. The number of points marked as ‘station’ in the railway field are most likely the only points in our data set that represent actual stations in London. There is still a difference between the official numbers and the OSM extract, but we will go on and use the best information we have from this attribute and our search and remove all other points from our OSM data set:

# extract only the points that we think are actual train and tube stations

london_stations_osm <- london_stations_osm %>%

filter(railway == "station")We have now cleaned our london_stations_osm spatial dataframe to remove all those points within our data set that are not tagged as railway == "station". Our london_stations spatial dataframe is of course an authoritative data set from TfL, so we know at least that this data should be accurate - therefore, it would be great if we could compare our two data sets to one another spatially to double-check our london_stations_osm spatial dataframe contains all the data within our london_stations spatial dataframe.

We can first look at this by comparing their distributions visually on a map. But first, as our london_stations spatial dataframe still extends outside of London, we will go ahead and clip this:

# clip London stations

london_stations <- london_stations %>%

st_intersection(london_ward_shp)## Warning: attribute variables are assumed to be spatially constant throughout all

## geometriesMap our two spatial dataframes to compare their spatial coverage:

# plot our London Wards first with a grey background

tm_shape(london_outline) + tm_fill() +

# plot OSM station data in black

tm_shape(london_stations_osm) + tm_dots(col = "black") +

# plot TfL station data in red

tm_shape(london_stations) + tm_dots(col = "red") +

# add north arrow

tm_compass(type = "arrow", position = c("right", "bottom")) +

# add scale bar

tm_scale_bar(breaks = c(0, 5, 10, 15, 20), position = c("left", "bottom")) +

# add our OSM contributors statement

tm_credits("© OpenStreetMap contributors")

What we can see is that it looks like our OSM data actual does a much better job at covering all train and tube stations across London (not a surprise with 300 more stations in the data set) - but still it is pretty hard to get a sense of comparison from a static map like this whether it contains all of the tube and train stations in our london_stations spatial dataframe. An interactive map would enable us to interrogate the spatial coverage of our two station spatial dataframes further. To do so, we use the tmap_mode() function and change it from its default plot() mode to a view() model:

# change tmap mode to view / interactive mapping

tmap_mode("view")

# plot the outline of our London Wards first

tm_shape(london_outline) + tm_borders() +

# plot OSM station data in black

tm_shape(london_stations_osm) + tm_dots(col = "black") +

# plot TfL station data in red

tm_shape(london_stations) + tm_dots(col = "red")Using the interactive map, what we can see is that whilst we do have overlap with our data sets, and more importantly, our london_stations_osm spatial dataframe seems to contain all of the data within the london_stations spatial dataframe, although there are definitely differences in their precise location. Now depending on what level of accuracy we are willing to accept with our assumption that our OSM data contains the same data as our Transport for London data, we could leave our comparison here and move forward with our analysis. There are, however, several more steps we could complete to validate this assumption. The easiest first step is to simply reverse the order of our data sets to check that each london_stations spatial dataframe point is covered by reversing the drawing order:

# plot the outline of our London Wards first

tm_shape(london_outline) + tm_borders() +

# plot TfL station data in red

tm_shape(london_stations) + tm_dots(col = "red") +

# plot OSM station data in black

tm_shape(london_stations_osm) + tm_dots(col = "black")6.3.4 Spatial operations I

The comparision looks pretty good - but still the question is: can we be sure? Using geometric operations and spatial queries, we can look to find if any of our stations in our london_stations spatial dataframe are not present the london_stations_osm spatial dataframe. We can use specific geometric operations and/or queries that let us check whether or not all points within our london_stations spatial dataframe spatially intersect with our london_stations_osm spatial dataframe, i.e. we can complete the opposite of the clip/intersection that we conducted earlier. The issue we face, however is that, as we saw above, our points are slightly offset from one another as the data sets have ultimately given the same stations slightly different locations. This offset means we need to think a little about the geometric operation or spatial query that we want to use.

We will approach this question in two different ways to highlight the differences between geometric operations and spatial queries:

- We will use geometric operations to generate geometries that highlight missing stations from our

london_stationsspatial dataframe (i.e. ones that are not present in thelondon_stations_osmspatial dataframe.) - We will use spatial queries to provide us with a list of features in our

london_stationsspatial dataframe that do not meet our spatial requirements (i.e. are not present in thelondon_stations_osmspatial dataframe.)

6.3.4.1 Geometric operations

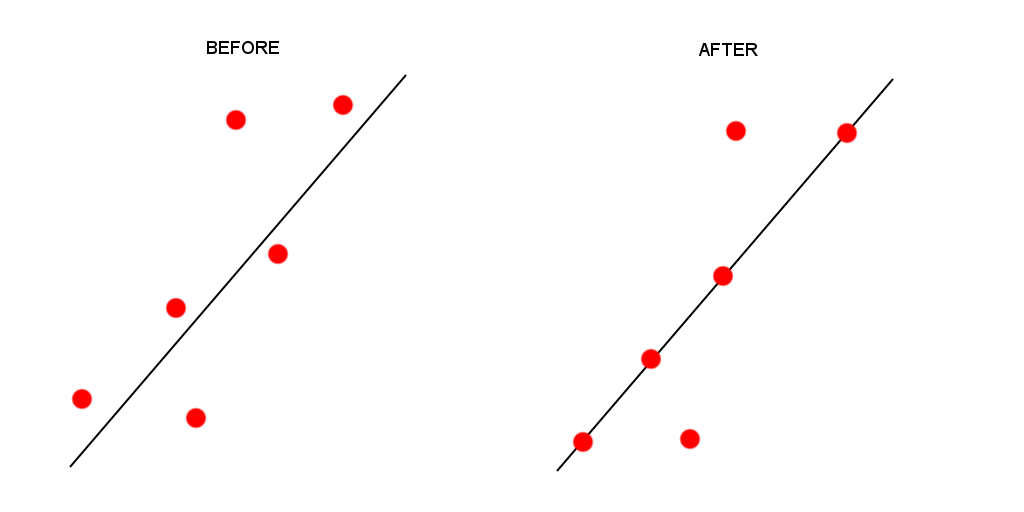

As highlighted above, the offset between our spatial dataframes adds a little complexity to our geometric operations code. To be able to make our direct spatial comparisons across our spatial dataframes, what we first need to do is try to snap the geometry of our london_stations spatial dataframe to our london_stations_osm spatial dataframe for points within a given distance threshold. This will mean that any points in the london_stations spatial dataframe that are within a specific distance of the london_stations_osm spatial dataframe will have their geometry changed to that of the london_stations_osm spatial dataframe.

Figure 6.1: Snapping points to a line. In our case we snap our points to other points.

By placing a threshold on this snap, we stop too many points moving about if they are unlikely to be representing the same station (e.g. further than 150m or so away) - but this still allows us to create more uniformity across our data sets’ geometries (and tries to reduce the uncertainty we add by completing this process).

Snap our our london_stations spatial dataframe to our london_stations_osm spatial dataframe for points within a 150m distance threshold:

# snap points

london_stations_snap <- st_snap(london_stations, london_stations_osm, 150)Now we have out snapped geometry, we can look to compare our two data sets to calculate whether or not our london_stations_osm spatial dataframe is missing any data from our london_stations_snap spatial dataframe.

To do so, we will use the st_difference() function which will return us the geometries of those points in our london_stations_snap spatial dataframe that are missing in our our london_stations_osm spatial dataframe. However, to use this function successfully (and this seems to be required for many of the comparison geometric operations in sf), we need to convert our our london_stations_osm spatial dataframe into a single geometry to enable this comparison.

To simplify our london_stations_osm spatial dataframe into a single geometry, we simply use the st_union() code we used with our London outline above:

# create a single geometry version of our london_stations_osm spatial dataframe

london_stations_osm_compare <- london_stations_osm %>%

st_union()

# compare our two point geometries to identify missing stations

missing_stations <- st_difference(london_stations_snap, london_stations_osm_compare)## Warning: attribute variables are assumed to be spatially constant throughout all

## geometriesYou should now find that we have an answer - and apparently have 3 missing stations with our london_stations_osm spatial dataframe. We can now plot our missing stations against our london_stations_osm spatial dataframe and identify whether these stations are missing or not!

# plot our london_stations_osm spatial dataframe in black

tm_shape(london_stations_osm) + tm_dots(col = "black") +

# plot our missing_stations spatial dataframe in green

tm_shape(missing_stations) + tm_dots(col = "green")Zoom in to our three missing stations - are these stations actually missing, or simply are the two locations (between the TfL data set and the OSM data set) too far apart that our snapping did not work?

If you investigate these three missing stations, you can actually see that our london_stations_osm spatial dataframe data set is actually more accurate than the TfL locations. All three missing stations were not in fact missing, but a) in our OSM data set but b) at a greater offset than 150m - and in all three cases, or OSM locations were more accurate. We can safely suggest that we can move forward with only using the london_stations_osm spatial dataframe and do not need to follow through with adding any more data to this data set.

6.3.4.2 Spatial queries

Before we go ahead and move forward with our analysis, we woll have a look at how we can implement the above quantification using spatial queries instead of geometric operations. Usually, when we want to find out if two spatial dataframes have the same or similar geometries, we would use one of the following queries:

st_equals()st_intersects()st_crosses()st_overlaps()st_touches()

Ultimately which query (or spatial relationship conceptualisation) you would choose would depend on the qualifications you are trying to place on your data set. In our case, considering our london_stations spatial dataframe and our london_stations_osm spatial dataframe, we have to consider the offset between our data sets. We could, of course, snap our spatial dataframe as above - but wouldn’t it be great if we could skip this step?

To do so, instead of snapping the london_stations spatial dataframe to the london_stations_osm spatial dataframe, we can use the st_is_within_distance() spatial query to ask whether our points in our london_stations spatial dataframe are within 150m of our london_stations_osm spatial dataframe. This ultimately means we can skip the snapping and st_difference() steps - and complete our processing in two simple steps.

Note

one thing to be aware of when running spatial queries in R and sf is that whichever spatial dataframe is the comparison geometry (i.e. spatial dataframe y in our queries), this spatial dataframe must ( be a single geometry - as we saw above in our st_difference() geometric operation. If it is not a single geometry, then the query will be run x number of observations * y spatial dataframe number of observations times, which is not the output that we want. By converting our comparison spatial dataframe to a single geometry, the query is only run for the number of observations in x.

You should also be aware that any of our spatial queries will return one of two potential outputs: a list detailing the indexes of all those observation features in x that do intersect with y, or a matrix that contains a TRUE or FALSE statement about this relationship. To define whether we want a list or a matrix output, we set the sparse parameter within our query to TRUE or FALSE respectively.

Query whether the points in our london_stations spatial dataframe are within 150m of our london_stations_osm spatial dataframe:

# create a single geometry version of our `london_stations_osm` spatial

# dataframe for comparison

london_stations_osm_compare <- london_stations_osm %>%

st_union()

# compare our two point geometries to identify missing stations

london_stations$in_osm_data <- st_is_within_distance(london_stations, london_stations_osm_compare,

dist = 150, sparse = FALSE)We can go ahead and count() the results of our query.

# count the number of stations within 150m of our the OSM spatial dataframe

count(london_stations, in_osm_data)## Simple feature collection with 2 features and 2 fields

## Geometry type: MULTIPOINT

## Dimension: XY

## Bounding box: xmin: 505625.9 ymin: 168579.9 xmax: 556144.8 ymax: 196387.1

## Projected CRS: OSGB 1936 / British National Grid

## # A tibble: 2 × 3

## in_osm_data[,1] n geometry

## * <lgl> <int> <MULTIPOINT [m]>

## 1 FALSE 3 ((533644.3 188926.1), (537592.5 179814.9), (538301.6 17…

## 2 TRUE 283 ((505625.9 184164.2), (507565.2 185008.3), (507584.9 17…Great - we can see we have 3 stations missing (3 FALSE observations), just like we had in our geometric operations approach. We can double-check this by mapping our two layers together:

# plot our london_stations by the in_osm_data column

tm_shape(london_stations) + tm_dots(col = "in_osm_data") +

# add our missing_stations spatial dataframe

tm_shape(missing_stations) + tm_dots(col = "green")You can turn on and off the missing_stations layer to compare the locations of these points to the FALSE stations within our london_stations_osm spatial dataframe, generated by our spatial query.

6.3.4.3 File export

Now we have done our checks and we know that we can move forward with using our tube and train station file, we should save a copy for usage at a later stage. Save your london_stations_osm spatial dataframe under the transport folder in your raw directory as a shapefile:

# write out london_stations_osm to a shapefile

st_write(london_stations_osm, "data/raw/transport/osm_stations.shp")6.3.5 Spatial operations II

We now have our London bike theft and train stations ready for analysis - we just need to complete one last step of processing with this data set - calculating whether or not a bike theft occurs near to a train station. As above, we can use both geometric operations or spatial queries to complete this analysis.

6.3.5.1 Geometric operations

Our first approach using geometric operations will involve the creation of a buffer around each train station to then identify which bike thefts occur within 400m of a train or tube station. Once again, the sf library has a function for generating buffers - we just need to know how to deploy it successfully on our london_stations_osm spatial dataframe.

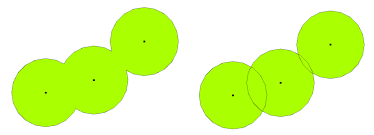

When it comes to buffers, we need to consider two main things - what distance will we use (and are we in the right CRS to use a buffer) and whether we want individual buffers or a single buffer.

Figure 6.2: A single versus multiple buffer. The single buffer represents a dissolved version of the multiple buffer option. Source: Q-GIS, 2020.

In terms of CRS, we want to make sure we use a CRS that defines its measurement units in metres - this is because it makes life significantly easier when calculating these buffers. If our CRS does not use metres as its measurement unit, it might be in a base unit of an Arc Degree or something else that creates difficulties when converting between a required metre distance and the measurement unit of that CRS. In our case, we are using British National Grid and, luckily for us, the units of the CRS is metres, so we do not need to worry about this.

In terms of determing whether we can create a single or multiple buffers, we can investigate the documentation of the function st_buffer() to find out what additional parameters it takes - and how. What we can find out is that we need to (of course!) provide a distance for our buffer - but whatever figure we supply, this will be interpreted within the units of the CRS we are using. Fortunately none of this is our concern - we know we can simply input the figure or 400 into our buffer and this will generate a buffer of 400m.

# generate a 400m buffer around our london_stations_osm data set, union it to

# create one buffer

station_400m_buffer <- london_stations_osm %>%

st_buffer(dist = 400) %>%

st_union()You can then go ahead and plot our buffer to see the results, entering plot(station_400m_buffer) within the console:

To find out which bike thefts have occured within 400m of a station, we will use the st_intersects() function.

Note

Before we move forward, one thing to clarify is that there is a difference between st_intersects() and the st_intersections() function we have been used so far. Unlike the st_intersections() function which creates a ‘clip’ of our data set, i.e. produces a new spatial dataframe containing the clipped geometry, the st_intersects() function simply identifies whether “x and y geometry share any space”. As explained above, as with all spatial queries, the st_intersects() function can produce two different outputs: either a list detailing the indexes of all those observation features in x that do intersect with y or a matrix that contains a TRUE or FALSE statement about this relationship. As with our previous spatial query, we will continue to use the matrix approach - this means for every single bike theft in London, we will know whether or not it occured within our chosen distance of a train station and join this as a new column to our bike_theft_2019 spatial dataframe.

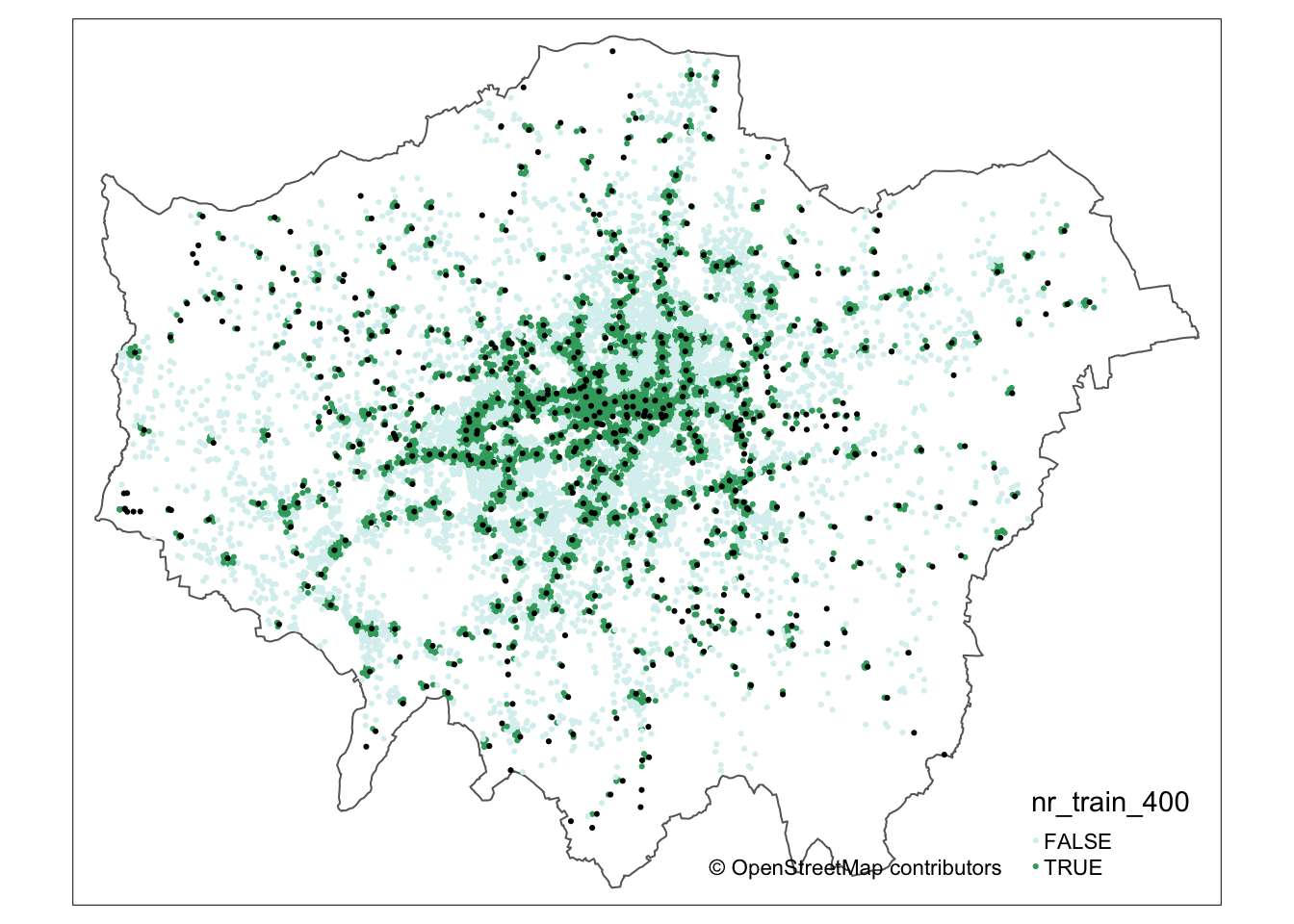

To detect which bike thefts occur within 400m of a train or tube station, we can do:

# test whether a bike theft intersects with our station buffer and store this

# as a new column called nr_train_400m

bike_theft_2019$nr_train_400 <- bike_theft_2019 %>%

st_intersects(station_400m_buffer, sparse = FALSE)We could go ahead and recode this to create a 1 or 0, or YES or NO after processing, but for now we will leave it as TRUE or FALSE. We can go ahead and now visualise our bike_theft_2019 based on this column, to see those occuring near to a train station:

# set tmap back to drawing mode

tmap_mode("plot")

# plot our london outline border

tm_shape(london_outline) + tm_borders() +

# plot our bike theft and visualise the nr_train_400m column

tm_shape(bike_theft_2019) + tm_dots(col = "nr_train_400", palette = "BuGn") +

# add our train stations on top

tm_shape(london_stations_osm) + tm_dots(palette = "gray") +

# add our OSM contributors statement

tm_credits("© OpenStreetMap contributors")

It should be of no surprise that visually we can of course see some defined clusters of our points around the various train stations. We can then utilise this resulting data set to calculate the percentage of bike thefts have occured at this distance - but first we will look at the spatial query approach to obtaining the same data.

6.3.5.2 Spatial queries

Like earlier, we can use the st_is_within_distance() function to identify those bike thefts that fall within 400m of a tube or train station in London.

We will again need to use the single geometry version of our london_stations_osm spatial dataframe for this comparison - and again use sparse = FALSE to create a matrix that we simply join back to our bike_theft_2019 spatial dataframe as a new column:

# compare our two point geometries to identify missing stations

bike_theft_2019$nr_train_400_sq <- st_is_within_distance(bike_theft_2019, london_stations_osm_compare,

dist = 400, sparse = FALSE)We can compare the outputs of our two different approaches to check that we have the same output - either through our count() or mapping approach. If you were to do this you will see that we have achieved the exact same output - with fewer lines of code and, as a result, quicker processing. However, unlike with the geometric operations, we do not have a buffer to visualise this distance around a train station, which we might want to do for maps in a report or presentation, for example. Once again, it will be up to you to determine which approach you prefer to use - some people prefer using the more visual techniques of geometric operations, whereas others might find spatial queries to answer the same questions.

6.3.5.3 Theft at train and tube locations?

Now we have, for each bike theft in our bike_theft_2019 spatial dataframe, an attribute of whether it occurs within 400m of a train or tube station.

We can quite quickly use the count() function to find out just how many thefts these clusters of theft represent.

# count the number of bike thefts occuring 400m within a station

count(bike_theft_2019, nr_train_400)## Simple feature collection with 2 features and 2 fields

## Geometry type: MULTIPOINT

## Dimension: XY

## Bounding box: xmin: 504861.2 ymin: 159638.3 xmax: 556969.7 ymax: 199879.7

## Projected CRS: OSGB 1936 / British National Grid

## # A tibble: 2 × 3

## nr_train_400[,1] n geometry

## * <lgl> <int> <MULTIPOINT [m]>

## 1 FALSE 8524 ((504907.2 183392.4), (504937.2 184142.4), (504952.2 1…

## 2 TRUE 10211 ((504861.2 175885.4), (505339.2 184226.4), (505394.1 1…Over 40 per cent of bike thefts occur within 400m of a train station - that is quite a staggering amount, but if we consider commuter behaviour - and certain “crime theories” such as routine activity theory - this occurence is not be unexpected. After all, if a bike is left at a train station, it is unlikely to be watched by a ‘suitable guardian’ nor is a simple bike lock likely to deter a thief, making it a ‘suitable target’. Furthermore, train stations are often located in areas of high areas of daytime populations - particularly in London. Overall, our main hypothesis - that bike thefts occur primarily near train and tube stations - is perhaps not quite proven, but so far we have managed to quantify that a substantial amount of bike thefts do occur within 400m of these areas.

In a final step, we will conduct a very familiar procedure: aggregating our data to the ward level. At the moment, we have now calculated for each bike theft whether or not it occurs within 400m of a tube or train station. We can use this to see if specific wards are hotspots of bike crimes near stations across London. To do this, we will be using the same process we used in QGIS - counting the number of points in each of our polygons, i.e. the number of bike thefts in each ward.

To create a PIP count within sf, we use the st_intersects() function again - but instead of using the matrix output of TRUE or FALSE that we have used before, what we actually want to extract from our function is the total number of points it identifies as intersecting with our london_ward_shp spatial dataframe. To achieve this, we use the lengths() function from the base R package to count the number of wards returned within the index list its sparse output creates.

Remember, this sparse output creates a list of the bike thefts (by their index) that intersect with each ward - therefore, the lengths() function will return the length of this list, i.e. how many bike thefts each ward contains or, in other words, a Point-in-Polygon count. This time around therefore we do not set the sparse function to FALSE but leave it as TRUE (its default) by not entering the parameter. As a result, we can calculate the number of bike thefts per ward and the number of bike thefts within 400m of a station per ward and use this to generate a theft rate for each ward of the number of bikes thefts that occur near a train station for identification of these hotspots.

Calculate the number of bike thefts per ward and the number of bike thefts within 400m of a station per ward using the PIP operation via st_intersects():

# run a PIP for total number of bike thefts occuring within a ward using

# st_intersects function

london_ward_shp$total_bike_theft <- lengths(st_intersects(london_ward_shp, bike_theft_2019))

# run a PIP for number of bike thefts within 400m of train station within a

# ward using st_intersects function

london_ward_shp$nr_station_bike_theft <- lengths(st_intersects(london_ward_shp, filter(bike_theft_2019,

nr_train_400 == TRUE)))As you can see from the code above, we have now calculated our total bike theft and bike theft near a train station for each ward. The final step in our processing therefore is to create our rate of potentially station-related bike theft = bike theft near train station / total bike theft.

Note

We are looking specifically at the phenomena of whether bike theft occurs near to a train or tube station or not. By normalising by the total bike theft, we are creating a rate that shows specifically where there are hotspots of bike theft near train stations. This, however, will be of course influenced by the number of train stations within a ward, the size of the ward, and of course the number of bikes and potentially daytime and residential populations within an area.

Calculate the rate of bike theft within 400m of a train or tube station out of all bike thefts for each ward:

# calculate the rate of bike thefts within 400m of train or tube station

london_ward_shp$btns_rate <- (london_ward_shp$nr_station_bike_theft/london_ward_shp$total_bike_theft) *

1006.4 Assignment

Now we worked through all this, for this week’s assignment:

- Create a proper map of the rate of bike thefts within 400m of a train or tube station.

- Re-run the above analysis at four more distances: 100m, 200m, 300m, 500m and calculate the percentage of bike theft at these different distances. You can choose whether you would like to use the geometric operations or spatial queries approach. What do you think could explain the substantial differences in counts as we increase away from the train station from 100 to 200m?

6.5 Before you leave

And that is how you can conduct basic geometric operations and spatial queries using R and sf. Of course: more RGIS in the coming weeks, but this concludes the tutorial for this week.